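I've been reading a bit about agile testing lately, and it's still fuzzy to me. Partly that's because I've never seen it in a real environment with a real process. Maybe it has no fixed model or process at all, and is more like telling people to be "brave" or to "work hard." Some people are brave in facing life, some in challenging themselves, some in facing failure and setbacks. Fine! They've all achieved "brave," but exactly how you go about being brave is hard to pin down; everyone has their own way of practicing it. Still, they all rely on "brave" to overcome the difficulties in front of them. Of course, agile isn't just a word. Through the continued exploration of those who came before, a good deal of experience has been accumulated and summarized for us to draw on.

On to the topic of this post: how software testers divide up the work. I'll mainly talk about the division of labor in traditional software testing, because I still haven't figured out how it works in agile testing.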

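Collective testing

Testing literature may well have another name for this approach. "Collective testing" is just a name I picked that roughly matches how I understand it.

The model is this: all the testers in the company stick together, and whenever a project comes in, everyone tests that one project.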

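First, the advantages of this approach:

1. Every member of the test team has strengths and weaknesses, and people cover for each other as they work, so defects get found quickly. As the saying goes, three cobblers with their wits combined equal one Zhuge Liang: one highly experienced tester will not necessarily find more problems than three average ones.

2. More people also means more defects found in less time.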

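Now the disadvantages:

1. Every person is another mouth to feed, so the labor cost is high (salaries, average time invested per person, test machines and other hardware).

2. When several projects need testing at once: sorry, one at a time, please get in line.

3. Work is duplicated. The same defect may well be found by every tester at once; in other words, the duplication rate is high.

4. It's hard to tell testers' skill levels apart. On one project some testers may not find a single defect while others find nearly all of them, and the tester who found nothing this time may find plenty on the next project.

5. A missed defect is the whole team's responsibility. (This isn't clearly a disadvantage; it depends on whether the team atmosphere is positive or negative.)

(You may feel this analysis leaves out too much. If you're interested enough to keep reading, I'll come back to it later.)

Fine! Collective testing has too many drawbacks. Like the "eating from one big pot" of the country's early years, it is bound to hold back development. So let's look at several other ways to divide the work.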

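Division by test content

Testing a project covers documentation testing, usability testing, functional logic testing, UI testing, configuration and compatibility testing, and more. We can assign each tester different content according to their strengths.

Advantages of dividing by content:

1. Clear division of labor: everyone knows what their own testing should focus on.

2. Clear accountability: when a defect slips through, it's clear who was responsible.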

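Division by test process

Our project test process generally involves drawing up a test plan, writing test cases, executing test cases, and producing a test report. We can divide the work along these stages.

Different people are responsible for different stages of testing.

Advantages:

1. A clear process: as in a waterfall-style development process, each stage is handled by different people.

2. Each stage differs in difficulty and in the skills it requires, so people can be matched to stages:

Test plan writers need an overall grasp of the project's schedule, resource allocation, test content, and execution process.

Test case writers need a deep understanding of the project requirements, test methods, and test points.

Test case executors need to be careful, know how to use the defect tracking system, communicate well, and help developers locate defects.

Test report writers need to compile statistics on the testing process, the number, types, and distribution of defects, and test case execution. This role can also be filled by the test executors.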

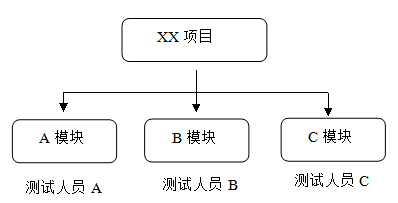

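Division by project module

For medium and large projects this division is essential. Such projects have many modules and many features. Having different testers own the features of different modules makes the testing work clearer.

1. High utilization of people. Why? Each tester is responsible for different features, so the work doesn't overlap or repeat.

2. Deep defects are easier to dig out. If tester A tests this feature today and that one tomorrow, they can't gain a deep understanding of the internal logic and business rules of what they're testing, and will only find superficial defects. A tester who owns one module over the long term is more likely to find deeper defects, and deep defects are often the fatal ones.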

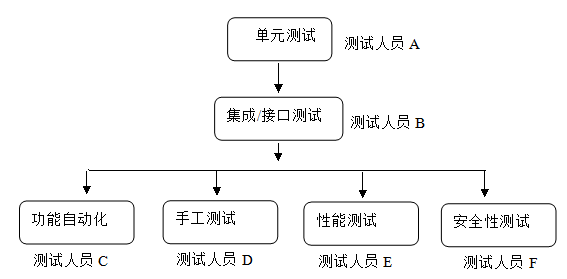

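Division by test type

Beyond functional testing, software needs unit testing and interface testing during the coding stage. During system testing, some modules may get functional test automation to make functional testing more efficient, and we also have to consider performance, security, and so on. These are the most common categories in my projects. We can assign work to testers by type, though this demands a fairly high level of specialization from them.

Characteristics of this division:

1. High skill requirements. Apart from manual testing, whose bar is lower (or so it appears on the surface), every category calls for strong specialist skills. Security testing, for example, requires knowledge of network protocols, programming, script-based attacks, SQL injection, and vulnerability analysis.

2. Little interchange between categories. Precisely because each category needs different skills, and even though it's all "testing," you can't simply have someone who does unit testing go do performance testing.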

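Questions about the classification above

Having read through these approaches, doesn't each one look familiar? And yet no company works by just one of them.

Take my current company. We have a long-running internet product with a lot of features. Each tester is responsible for different functional modules, and basically handles everything from test plan to test report alone. For larger or urgent version iterations, several people test the version together (usually by splitting the version's features further among individuals). For security testing, dedicated security staff guide the functional testers in running security scans and analysis on the features they own. There is an independent performance testing group that tests the product versions that need performance testing. There are also dedicated test automation engineers who automate the features suited to automation.

My company's setup includes nearly all of the approaches above. So why did I split them into such single-dimension categories? Because it helps sort out the different ways testing work can be divided. In real work there are large projects and small ones, client software and internet products, projects lasting a few days and "permanent" ones, projects delivered once on completion and ones that are continuously iterated. We can choose the division that fits the project at hand.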

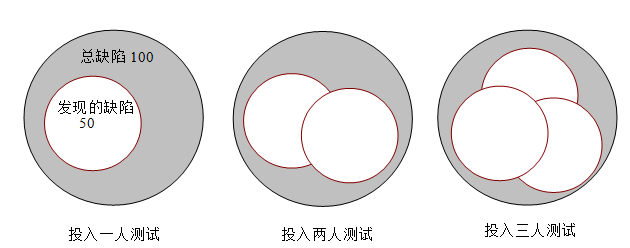

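The relationship between staffing and defects found

This also has to be considered when dividing the work. For a given project, the number of people and the amount of time invested are closely related to the number of defects found.

Time invested versus defects found:

With a fixed number of people, the more time invested, the more defects found. But there is a pattern: the later the stage, the fewer new defects turn up. Suppose the software has 100 defects in total: the first week finds 50, the second week finds 20 new ones, and the third week may find only 10. And one inevitable result is that testing cannot find every defect.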
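To make the pattern concrete, here is a minimal sketch of one possible model. It is my own simplification, not a measured rule: it assumes that every week the team finds a fixed fraction of the defects that are still undiscovered. The function name `weekly_new_defects` and the parameter `weekly_find_rate` are hypothetical, chosen only for illustration.

```python
def weekly_new_defects(total_defects, weekly_find_rate, weeks):
    """Return the number of new defects found in each week.

    Assumption (illustrative only): each week the team finds a fixed
    fraction of the defects that are still undiscovered.
    """
    remaining = float(total_defects)
    found_per_week = []
    for _ in range(weeks):
        found = remaining * weekly_find_rate
        found_per_week.append(found)
        remaining -= found  # whatever is not found carries over to next week
    return found_per_week


if __name__ == "__main__":
    per_week = weekly_new_defects(total_defects=100, weekly_find_rate=0.5, weeks=4)
    cumulative = 0.0
    for week, found in enumerate(per_week, start=1):
        cumulative += found
        print(f"week {week}: {found:.1f} new, {cumulative:.1f} found so far")
```

Under this assumption the weekly count keeps shrinking and the cumulative total approaches the real total without ever reaching it, which matches both points above: later weeks yield less, and testing never finds everything.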

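Number of people versus defects found:

With a fixed amount of time, the more people invested, the more problems found. As the figure shows, the more people invested, the more the defects they find overlap. You might say that dividing up what each person tests clearly would remove the overlap. But the functional modules of a system are necessarily connected. Tester A may touch a feature that tester B is testing and find a problem there. Whether A tells B about the defect or files it directly (you could of course pretend not to see it and wait for B to find it), this counts as unavoidable overlap.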
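The overlap effect can be sketched with a rough probability model. This is an assumption of mine for illustration, not something taken from real project data: every tester independently finds each defect with the same probability. The function name `expected_overlap` and its parameters are hypothetical.

```python
def expected_overlap(total_defects, testers, find_probability):
    """Expected unique defects found and duplicate reports for a team.

    Assumption (illustrative only): each tester independently finds each
    defect with the same probability.
    """
    # Chance that a given defect is missed by every tester.
    missed_by_all = (1 - find_probability) ** testers
    unique_found = total_defects * (1 - missed_by_all)
    # Every individual find is one report; reports beyond the first
    # for the same defect are duplicates.
    total_reports = total_defects * find_probability * testers
    duplicates = total_reports - unique_found
    return unique_found, duplicates


if __name__ == "__main__":
    for team_size in (1, 2, 4, 8):
        unique, dup = expected_overlap(100, team_size, 0.5)
        print(f"{team_size} testers: {unique:.1f} unique, {dup:.1f} duplicate reports")
```

In this model each extra tester adds fewer new defects than the one before, while duplicate reports keep growing. Real teams that split modules between people will overlap less than this, so treat the numbers as an upper-bound intuition rather than a prediction.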

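Of course, dividing tasks more clearly does reduce the overlap effectively. But it also reduces the number of defects found and raises project risk, unless more time is put into testing. Test managers need to weigh this trade-off carefully.

When the project isn't urgent and there is ample time for testing, you can assign fewer people and extend the testing period to find more defects. When the project is urgent, you need more people so that more defects are found sooner. When the quality bar is very high, invest more people and more time. When testing time is short and the quality bar is low, fewer people and less time will do.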

--------------------------------------------------------------------------------------

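That's the end of this post, but there are still many questions I haven't explained clearly (or that I can't yet explain clearly).

1. When tester A finds a defect in module b (which tester B is responsible for), what should happen? A files the defect report; A tells B and lets B file it; or A ignores it and waits for B to find it.

2. When a project is urgent, how to balance people and time, and in some cases whether to leave certain modules untested.

3. Testers' career development, which is closely tied to how the testing work is divided.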
