定制型网站一般价格北京房子

前言:经常使用Objects.equals(a,b)方法的同学 应该或多或少都会因为粗心而传错参, 例如日常开发中 我们使用Objects.equals去比较 status(入参),statusEnum(枚举), 很容易忘记statusEnum.getCode() 或 statusEnum.getVaule() ,再比如 我们比较一个订单code时 orderCode(入参),orderDTO(其它业务对象) 很容易忘记orderDTO.getCode() 因为Objects.equals()两个参数都是Object类型,idea默认不会提示告警, 如果经常使用该方法 你一定很清楚的明白我在说什么。

针对以上痛点,博主编写了一个idea插件: Equals Inspection , 插件代码本身很简单,极其轻量级。难得的是 在目前暂没发现有人做了这件事时,而博主想到了可以通过IDE告警方式去解决问题,并且实际行动了。此外,知道该用什么API本身是件不容易的事,而阅读代码时,已经处于一个上帝视角,则会显得非常简单。

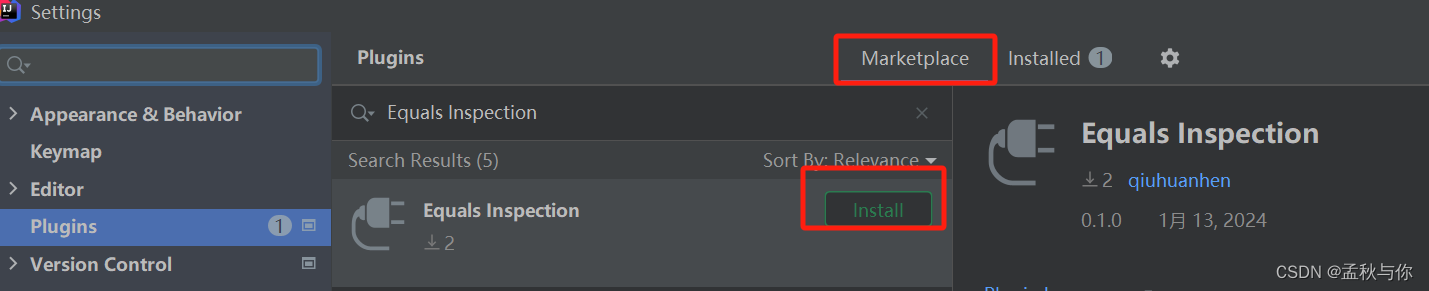

下载一(推荐):

idea插件市场搜索: Equals Inspection

下载二:

github : https://github.com/qiuhuanhen/objects-equals-inspect/releases 安装方式:1.idea内插件下载会自动安装 2. github下载直接将jar包拖进idea,重启idea

Q:为什么是IDE插件层面,而不是在java项目中重写一个工具类 针对类型判断呢?

A:

1.效率问题:java项目代码判断 说明要执行到该方法时才能校验,很多时候我们编写完,在测试环节被提了bug,我们自己断点执行一遍,才能发现传错了参,插件层面在我们编写时即可提醒,节省了大量时间.

2.设计问题: 很重要的一点,java项目层面的提示,要怎么提示?throw new RuntimeExection? 显然不太合理吧,例如在设计一些框架/通用代码时,类型就是可以不一致 难道抛一个异常阻止程序运行吗?插件尽管会提示报错,但它归根结底都只是一个样式,并不会阻止程序运行。

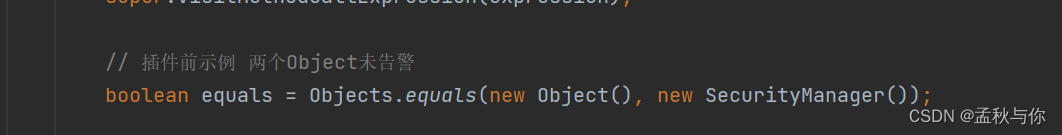

使用前后效果对比:

使用前没有告警

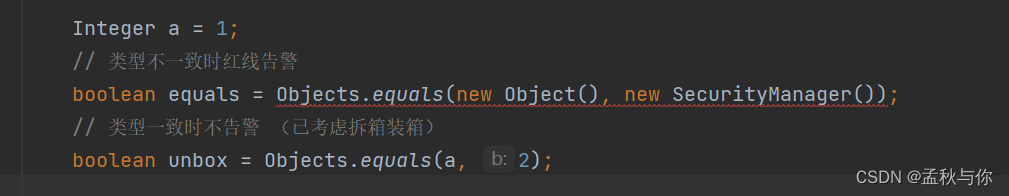

使用后示例:

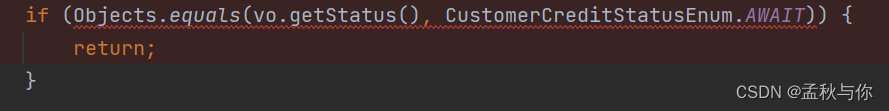

vo.getStatus(Integer类型)与枚举比较时,能直接提示我们类型不一致

(正确写法是StatusEnum.AWAIT.getValue() 与枚举类型的值进行比较)

以下就以博主编写的插件为例 ,写一篇如何编写idea插件的教程

注:本文由csdn博主 孟秋与你 编写,如您在其它地方看到本文,很可能是被其它博主爬虫/复制过来的,文章可能会持续更新,强烈建议您搜索:孟秋与你csdn 找到原文查看

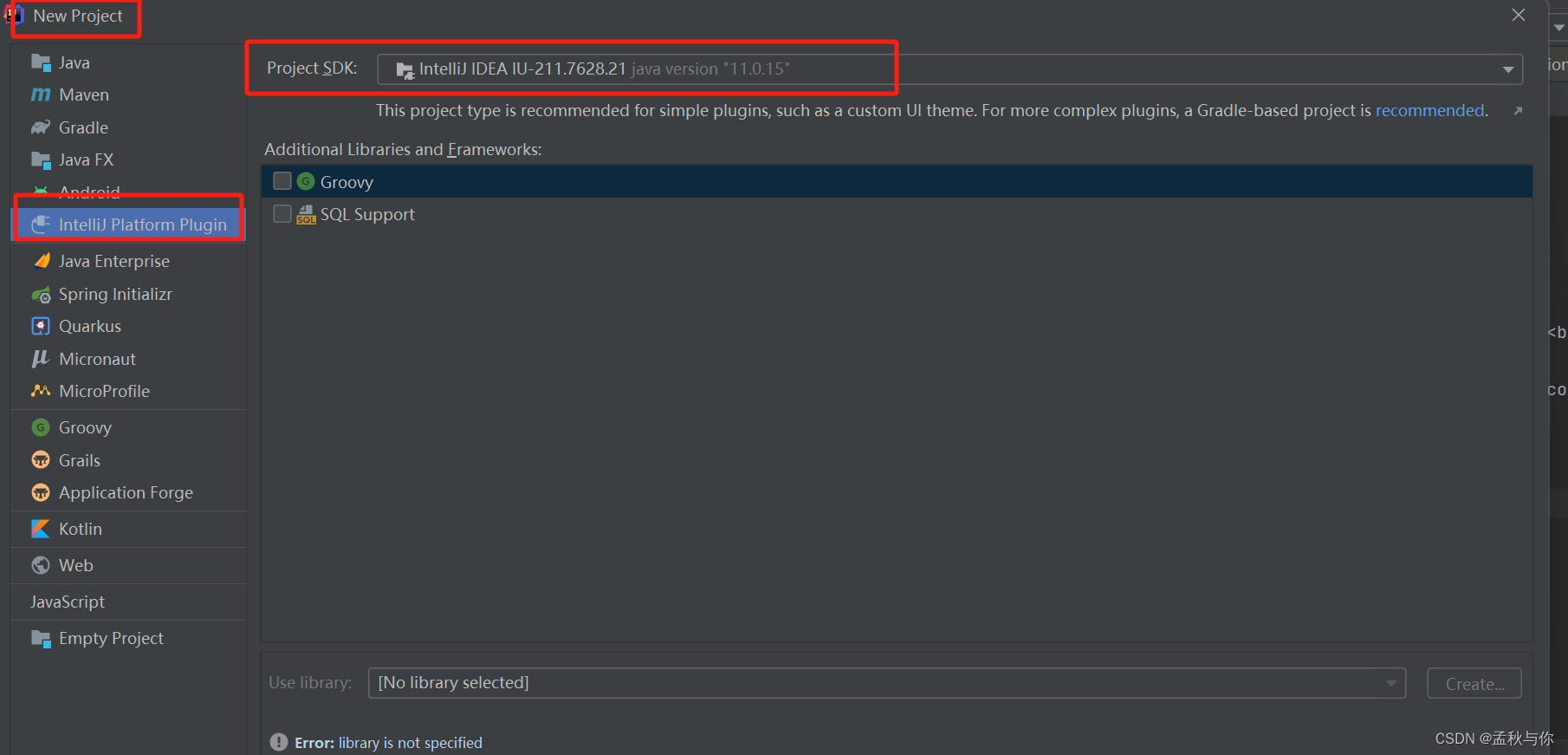

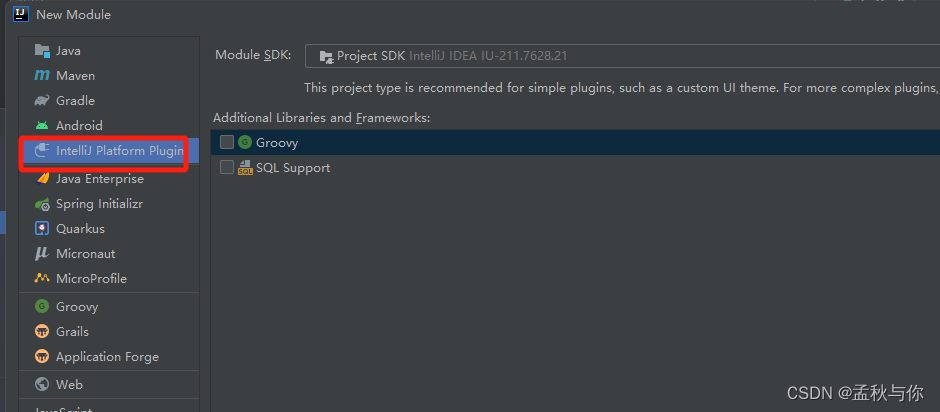

第一步:创建插件项目

tips:

1.需要jdk11

2.以idea2021.1版本为例,不同idea版本可能有所差异

new project ->IntelliJ Platform Plugin-> Project SDK (需要jdk11)

第二步:修改plugin.xml文件内容及创建java代码

其中plugin.xml的description节点,需要40个字符以上,否则插件上传时会报错。

编写插件的核心难点在于,我们不知道idea的api是什么,什么情况用什么api。

以下是可以参考的几点:

- idea官方文档 https://plugins.jetbrains.com/docs/intellij/welcome.html

- github idea 示例项目:https://github.com/JetBrains/intellij-sdk-code-samples

<idea-plugin><id>csdn-mengqiuyuni</id><name>Equals参数类型检查</name><version>0.1.0</version><vendor email="1005738053@qq.com" url="https://blog.csdn.net/qq_36268103"> </vendor><description><![CDATA[<li>check java.lang.Objects equals method params type ,if not match,idea will show the error line</li><br><li>could reduce Objects.equals(a,b) error params type by mistake</li><br><li>notice:this plugin can only inspect your code,it's just reminder java developers,but don't impact code execution,and never change or fix your code.</li>]]></description><change-notes><![CDATA[<li>github:qiuhuanhen,project name :objects-equals-inspect</li><br><li>beta version.csdn:孟秋与你</li><br><li>the first beta version,welcome,update date:2024.01.09</li>]]></change-notes><vendor>qiuhuanhen</vendor><!-- please see https://plugins.jetbrains.com/docs/intellij/build-number-ranges.html for description --><idea-version since-build="173.0"/><!-- please see https://plugins.jetbrains.com/docs/intellij/plugin-compatibility.htmlon how to target different products --><depends>com.intellij.modules.platform</depends><depends>com.intellij.java</depends><depends>com.intellij.modules.java</depends><extensions defaultExtensionNs="com.intellij"><localInspectionlanguage="JAVA"displayName="Title"groupPath="Java"groupBundle="messages.InspectionsBundle"groupKey="group.names.probable.bugs"enabledByDefault="true"level="ERROR"implementationClass="com.qiuhuanhen.ObjectsEqualsInspection"/><!--java类所在路径--></extensions></idea-plugin>package com.qiuhuanhen;import com.intellij.codeInspection.LocalInspectionTool;

import com.intellij.codeInspection.ProblemHighlightType;

import com.intellij.codeInspection.ProblemsHolder;

import com.intellij.psi.*;

import org.jetbrains.annotations.NotNull;/*** @author qiuhuanhen*/

public class ObjectsEqualsInspection extends LocalInspectionTool {@NotNull@Overridepublic PsiElementVisitor buildVisitor(@NotNull ProblemsHolder holder, boolean isOnTheFly) {return new MyVisitor(holder);}private static class MyVisitor extends JavaElementVisitor {private final ProblemsHolder holder;public MyVisitor(ProblemsHolder holder) {this.holder = holder;}@Overridepublic void visitMethodCallExpression(PsiMethodCallExpression expression) {super.visitMethodCallExpression(expression);String methodName = expression.getMethodExpression().getReferenceName();if ("equals".equals(methodName)) {PsiExpressionList argumentList = expression.getArgumentList();PsiExpression[] expressions = argumentList.getExpressions();if (expressions.length == 2) {PsiType arg1Type = expressions[0].getType();PsiType arg2Type = expressions[1].getType();// 都为空 不做提示 注:即使idea会提示 type不为空 ,为防止插件报NPE 还是有大量的非空判断if (null == arg1Type && null == arg2Type) {return;}// 其一为空 给出错误提示if (null == arg1Type || null == arg2Type) {holder.registerProblem(expression, "Objects.equals parameters are not of the same type.", ProblemHighlightType.GENERIC_ERROR_OR_WARNING);return;}// 这是忽视某些通用类,框架 用于比较反射class类型的情况if (arg1Type.getCanonicalText().contains("Class") && arg2Type.getCanonicalText().contains("Class")) {return;}if (isByte(arg1Type) && isByte(arg2Type)) {return;}if (isShort(arg1Type) && isShort(arg2Type)) {return;}if (isInt(arg1Type) && isInt(arg2Type)) {return;}if (isLong(arg1Type) && isLong(arg2Type)) {return;}if (isFloat(arg1Type) && isFloat(arg2Type)) {return;}if (isDouble(arg1Type) && isDouble(arg2Type)) {return;}if (isChar(arg1Type) && isChar(arg2Type)) {return;}if (isBoolean(arg1Type) && isBoolean(arg2Type)) {return;}if (!arg1Type.equals(arg2Type)) {holder.registerProblem(expression, "Objects.equals parameters are not of the same type.", ProblemHighlightType.GENERIC_ERROR_OR_WARNING);}}}}private boolean isInt(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.INT.equals(unboxedType)) {// 是 int 类型return true;}return PsiType.INT.equals(type) || "java.lang.Integer".equals(type.getCanonicalText());}private boolean isLong(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.LONG.equals(unboxedType)) {// 是 long 类型return true;}return PsiType.LONG.equals(type) || "java.lang.Long".equals(type.getCanonicalText());}private boolean isDouble(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.DOUBLE.equals(unboxedType)) {return true;}return PsiType.DOUBLE.equals(type) || "java.lang.Double".equals(type.getCanonicalText());}private boolean isFloat(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.FLOAT.equals(unboxedType)) {return true;}return PsiType.FLOAT.equals(type) || "java.lang.Float".equals(type.getCanonicalText());}private boolean isBoolean(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.BOOLEAN.equals(unboxedType)) {return true;}return PsiType.BOOLEAN.equals(type) || "java.lang.Boolean".equals(type.getCanonicalText());}private boolean isByte(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.BYTE.equals(unboxedType)) {return true;}return PsiType.BYTE.equals(type) || "java.lang.Byte".equals(type.getCanonicalText());}private boolean isChar(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.CHAR.equals(unboxedType)) {return true;}return PsiType.CHAR.equals(type) || "java.lang.Char".equals(type.getCanonicalText());}private boolean isShort(PsiType type) {PsiPrimitiveType unboxedType = PsiPrimitiveType.getUnboxedType(type);if (PsiType.SHORT.equals(unboxedType)) {return true;}return PsiType.SHORT.equals(type) || "java.lang.Short".equals(type.getCanonicalText());}}

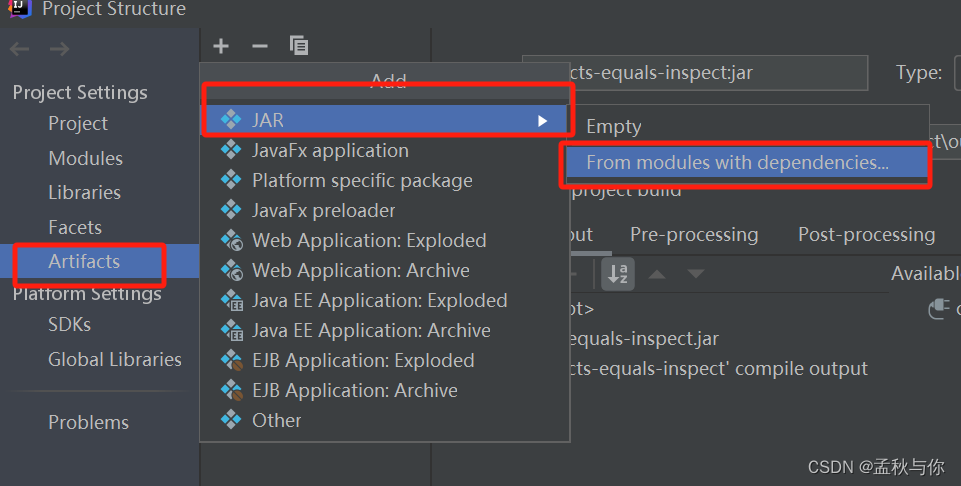

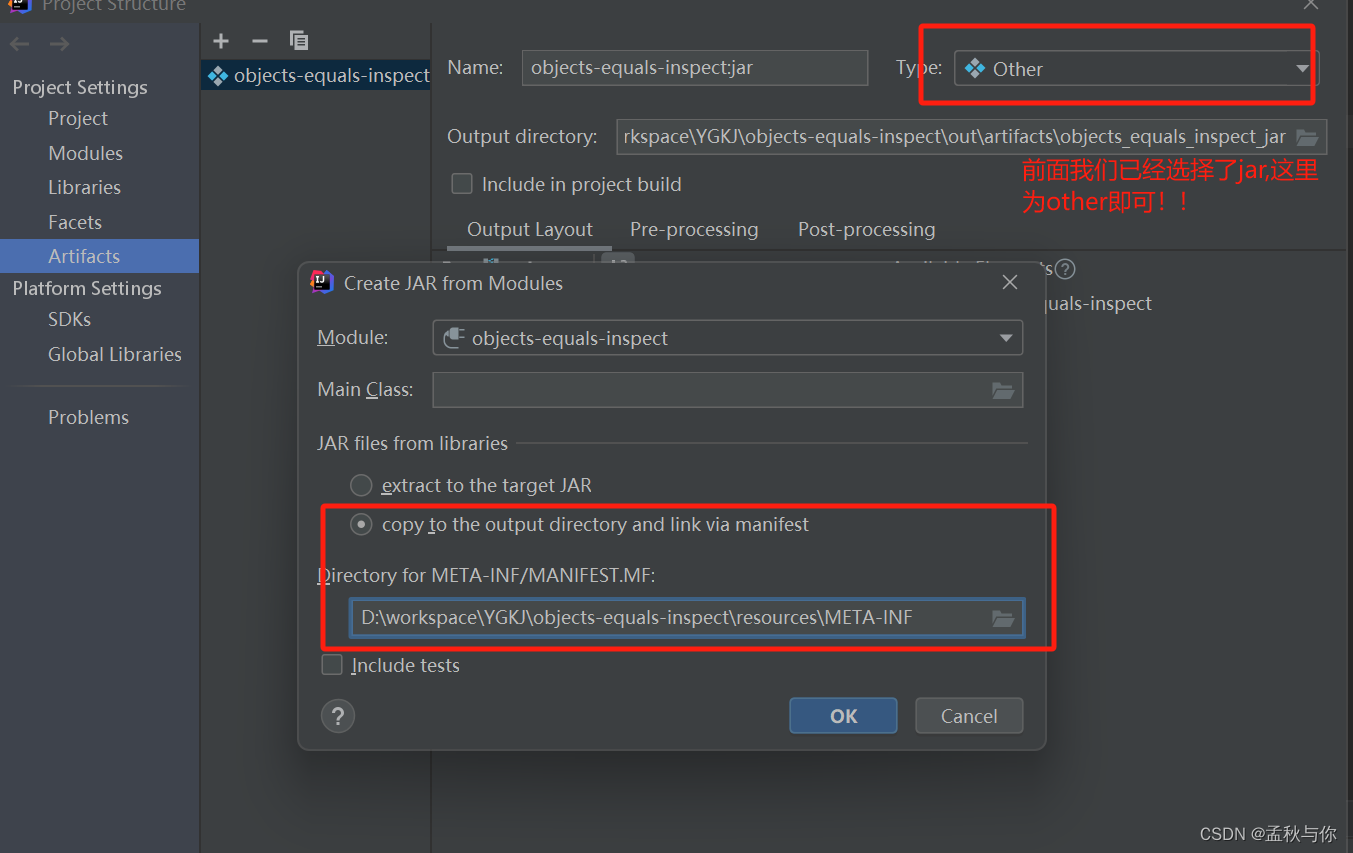

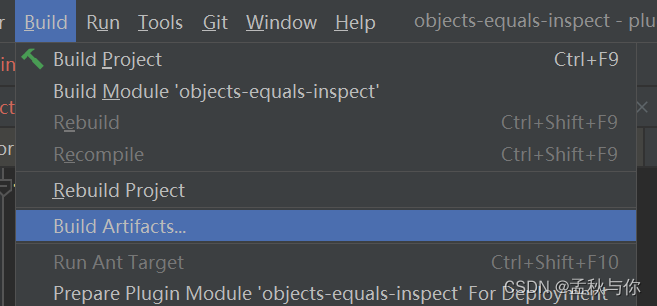

}第三步:打成jar包

没有main方法则不用选择main class

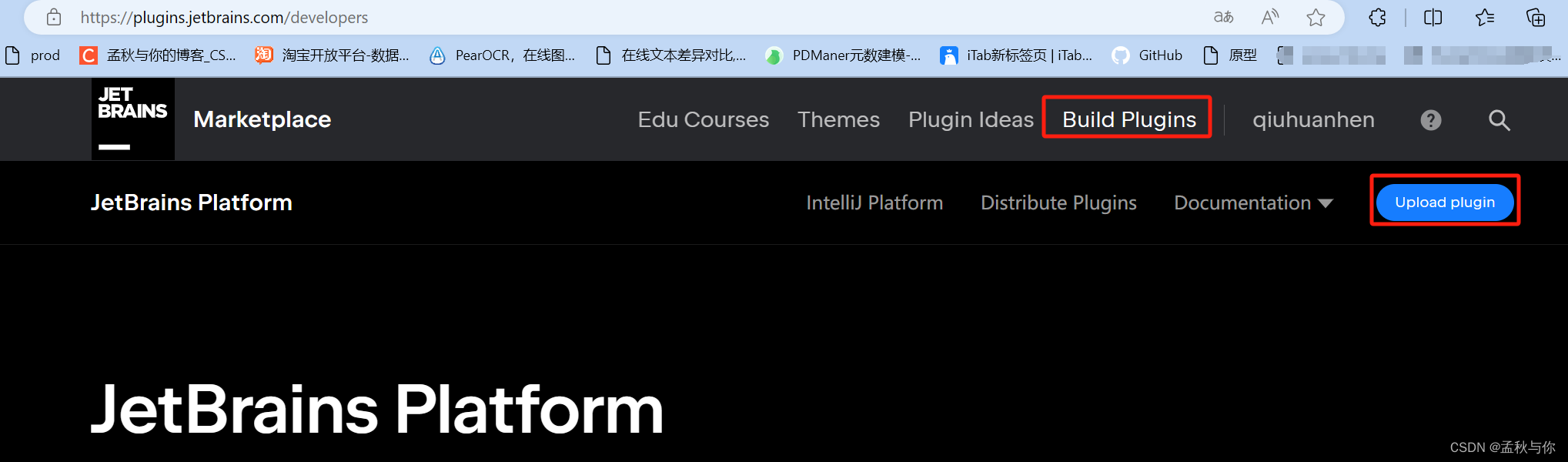

第四步:发布插件

发布插件没有什么难点,唯一值得注意的是description非常严格,必须要40个字符以上,且不能有非拉丁字符,博主在反复修改后发现不能有任何中文,最后把description里面的中文都改成了英文。

插件项目源码地址:https://github.com/qiuhuanhen/objects-equals-inspect

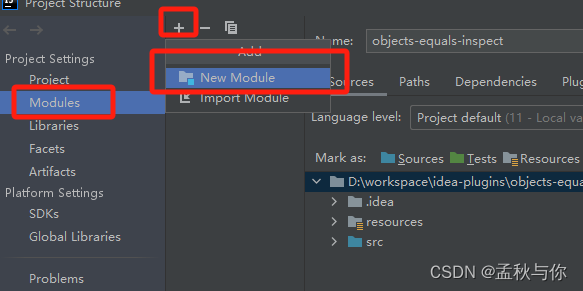

打开项目方式:

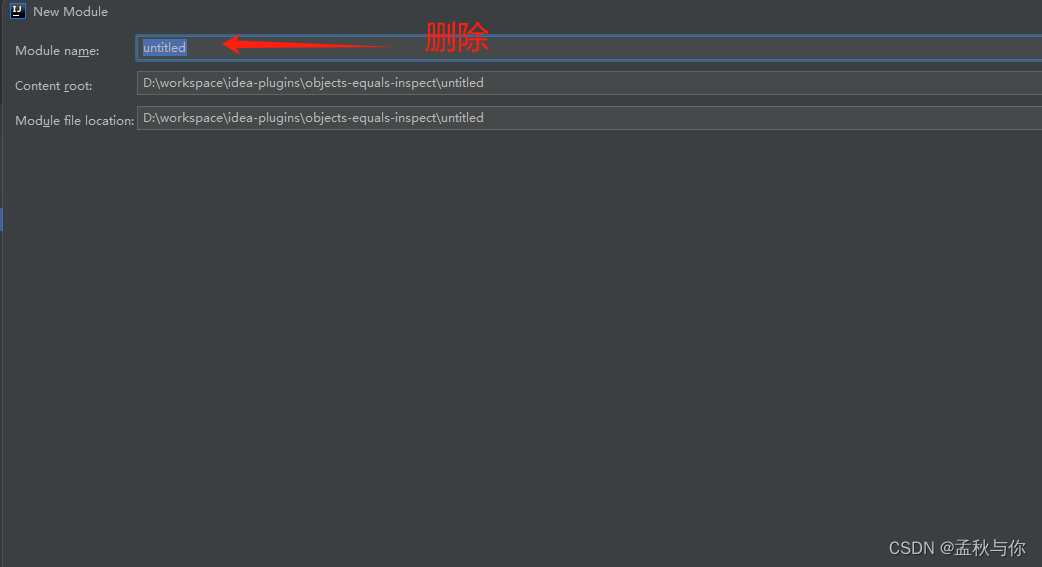

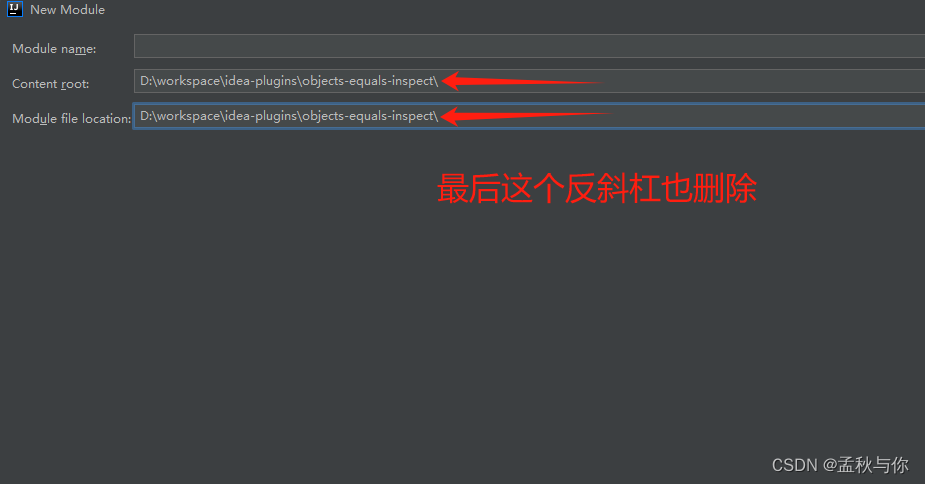

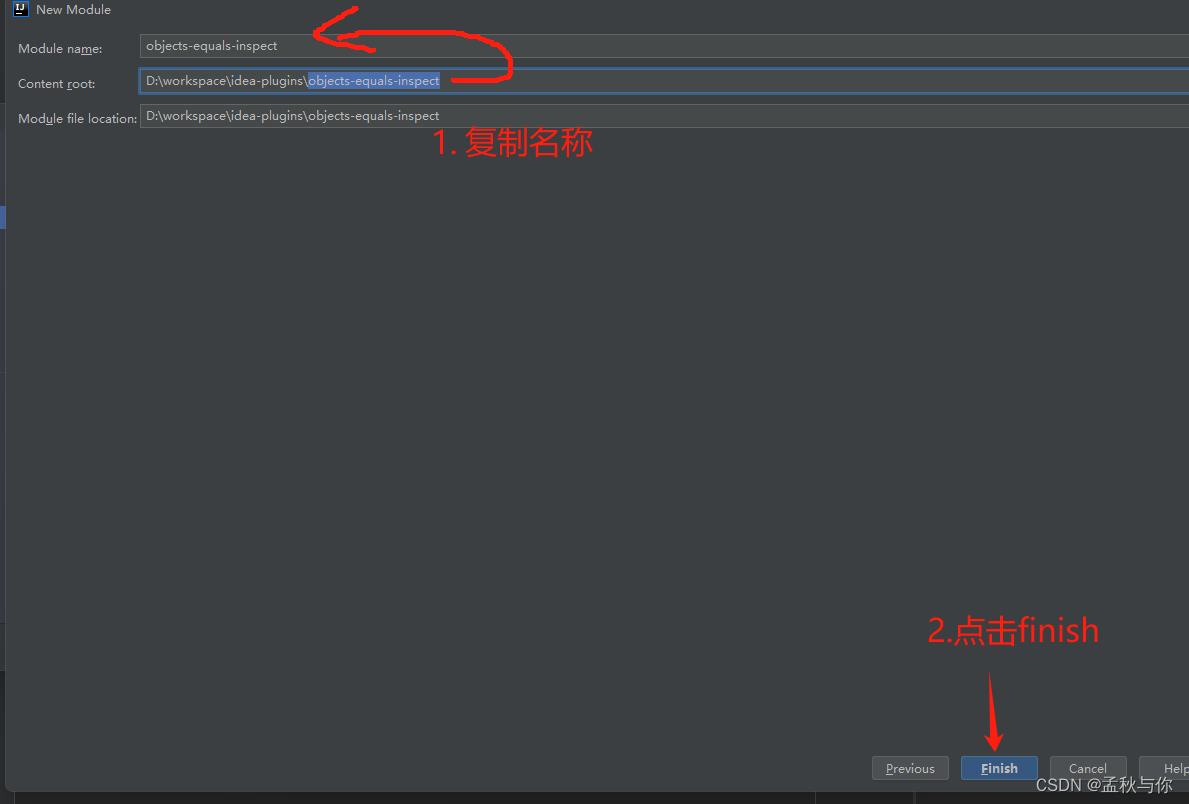

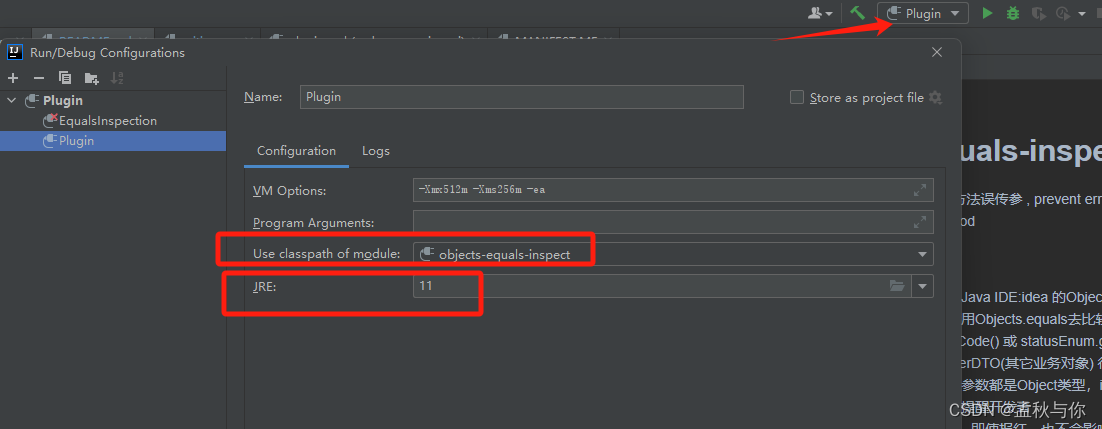

在github下载博主的项目,idea打开后 默认是常规项目,这时我们需要稍作处理

最后一步:

最后: 原创不易 ,欢迎各位在idea插件商店下载 Equals Inspection , 给github项目点上star 非常感谢各位