

AUTOSAR Configuration and Practice (Basics) 2.5: How the RTE Manages Data Consistency

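- 1. Introducing the data consistency problem

- 2. Managing data consistency

- 2.1 RTE management (between SWCs)

- 2.2 Interrupt protection (within an SWC)

- 2.3 Variable protection with IRVs (within an SWC)

- 2.4 Task allocation

- 2.5 Task preemption control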

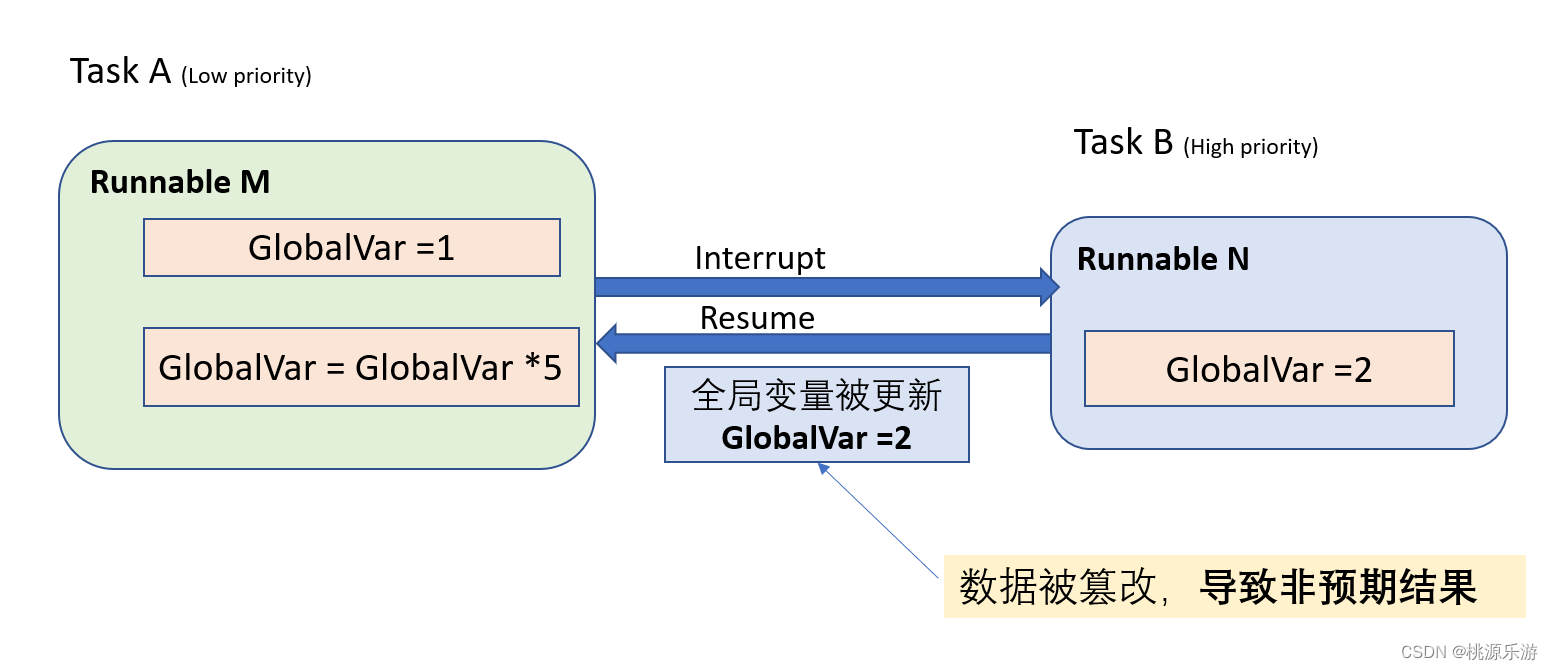

1. Introducing the Data Consistency Problem

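Data consistency problem: several operations read and write the same data concurrently. One task is preempted in the middle of its operation, another task modifies the data, and the first task then continues with a value it did not expect.

In an RTOS with preemptive scheduling, task preemption can cause consistency problems like the following.

Example: a preemptive scheduling system runs two tasks, a low-priority Task A and a high-priority Task B.

Task A wants to compute Var = GlobalVar * 5. The expected result is Var = 1 * 5 = 5.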

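- Task A: first assigns the initial value 1 to GlobalVar;

- Task B: has the higher priority, so as soon as it becomes ready it preempts Task A and assigns a new value to GlobalVar (GlobalVar = 2);

- Task A: resumes after Task B finishes and computes with GlobalVar, which now gives the wrong result:

Expected: 1 * 5 = 5

Actual: 2 * 5 = 10

In this example, preemption by the higher-priority task modified the data and produced an unintended result.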

2. Managing Data Consistency

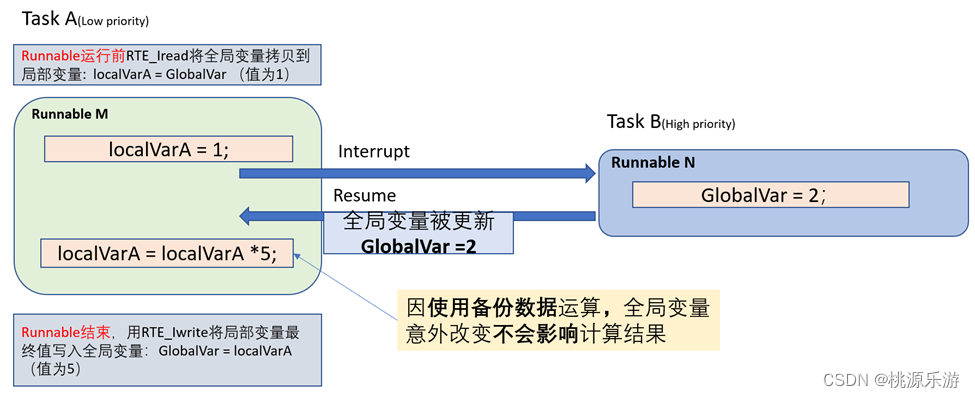

2.1 RTE Management (Between SWCs)

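Applicable scenario: runnables of different SWCs access the same data. The RTE usually handles this automatically, for example through implicit access with IRead and IWrite.

Method: a runnable works on a buffered copy of the data instead of the original, so a preempting task cannot change the value the runnable is using.

Example code: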

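```c
/* <r> = runnable, <p> = port, <d> = data element.
   Before the runnable starts, the RTE copies the data element into a task-local buffer. */
<Data> value = Rte_IRead_<r>_<p>_<d>();   /* returns the buffered copy */

/* user code operates on the local copy */

Rte_IWrite_<r>_<p>_<d>(value);            /* writes the buffer; the RTE publishes it after the runnable terminates */
```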

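Effect of applying RTE management to the data-corruption example from Section 1:

RTE management steps:

- Task A: before the runnable starts, the RTE copies the global variable into a local buffer, which Rte_IRead returns (local Var = 1);

- Task B: preempts Task A and overwrites the global variable (GlobalVar = 2);

- Task A: the computation still uses the buffered local Var, so the result is unaffected.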

For a more detailed description of the mechanism, see the earlier Section 2.3 on sender/receiver (S/R) interfaces.

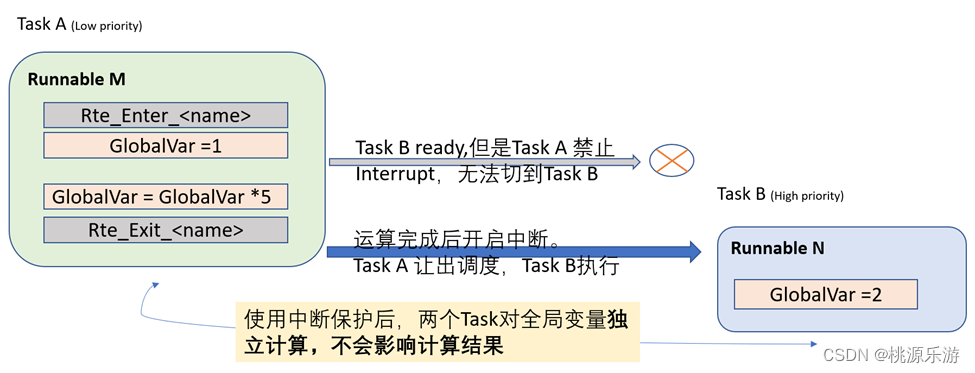

2.2 Interrupt Protection (Within an SWC)

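Applicable scenarios:

- Different runnables inside one SWC access a shared global variable. Runnables are similar to functions in a C file. If these functions are mapped to different tasks, one of them may preempt another, so more than one can be in progress at the same time.

- The code section to be protected is short, so keeping interrupts disabled does not delay higher-priority tasks for long.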

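Method: disable (suspend) all interrupts, only the OS interrupts, or, if the hardware supports it, only certain interrupt levels. No higher-priority task can then preempt the protected section.

Example code: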

```c
Rte_Enter_<ExclusiveArea>();

/* protected code section */

Rte_Exit_<ExclusiveArea>();
```

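Effect of applying interrupt protection to the data-corruption example from Section 1:

Interrupt protection steps:

- Task A: calls Rte_Enter_ before operating on GlobalVar, which disables interrupts;

- Task B: because interrupts are disabled, Task B cannot preempt Task A, even though it is ready and has the higher priority;

- Task A: calls Rte_Exit_ after operating on GlobalVar, which re-enables interrupts;

- Task B: the scheduler immediately lets Task B preempt Task A, and Task B starts its own operation on GlobalVar.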

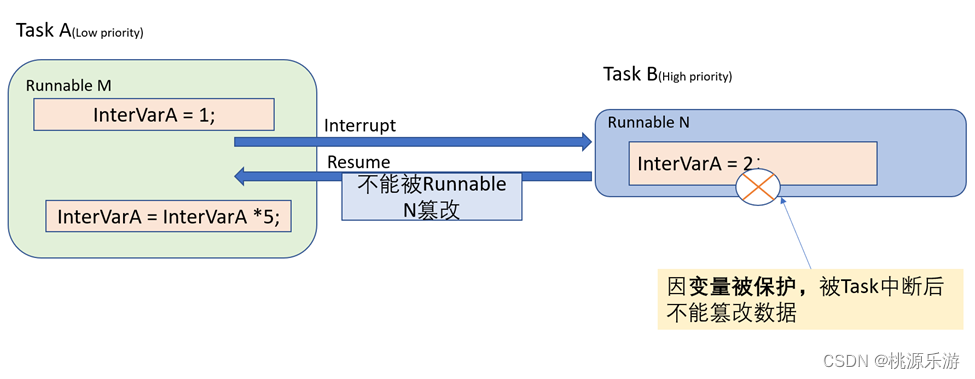

2.3 Variable Protection with IRVs (Within an SWC)

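Applicable scenario: protecting variables inside an SWC by limiting the scope of a variable to the runnables of that SWC.

Method: a runnable that has not been granted access to the variable is blocked from accessing it. InterRunnableVariables are defined between the runnables of one AUTOSAR software component, so they can only be accessed from inside that component. The access scope can also be configured per runnable. In the example, variable InterVarA can only be accessed by Runnable M.

Example code: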

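```c
Rte_IrvWrite_<r>_<v>(value);     /* explicit write of inter-runnable variable <v> by runnable <r> */

value = Rte_IrvRead_<r>_<v>();   /* explicit read of <v> by runnable <r> */
```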

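Effect of applying variable protection to the data-corruption example from Section 1:

Variable protection steps:

- Task A: assigns InterVarA = 1;

- Task B: preempts Task A and tries to overwrite InterVarA. Because the variable is protected, the write fails;

- Task A: continues its computation with InterVarA, so the result is unaffected.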

2.4 Task Allocation

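Map all runnables that access the global variable to the same task. The runnables then execute one after another and cannot preempt each other, which guarantees data consistency.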

2.5 Task Preemption Control

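Preemption between the affected tasks can be prevented in three ways: by assigning them the same priority, by assigning them the same internal OS resource, or by configuring the OS tasks as non-preemptive.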
